A Step-by-Step Introduction to How Machines Learn Like the Human Brain

Introduction

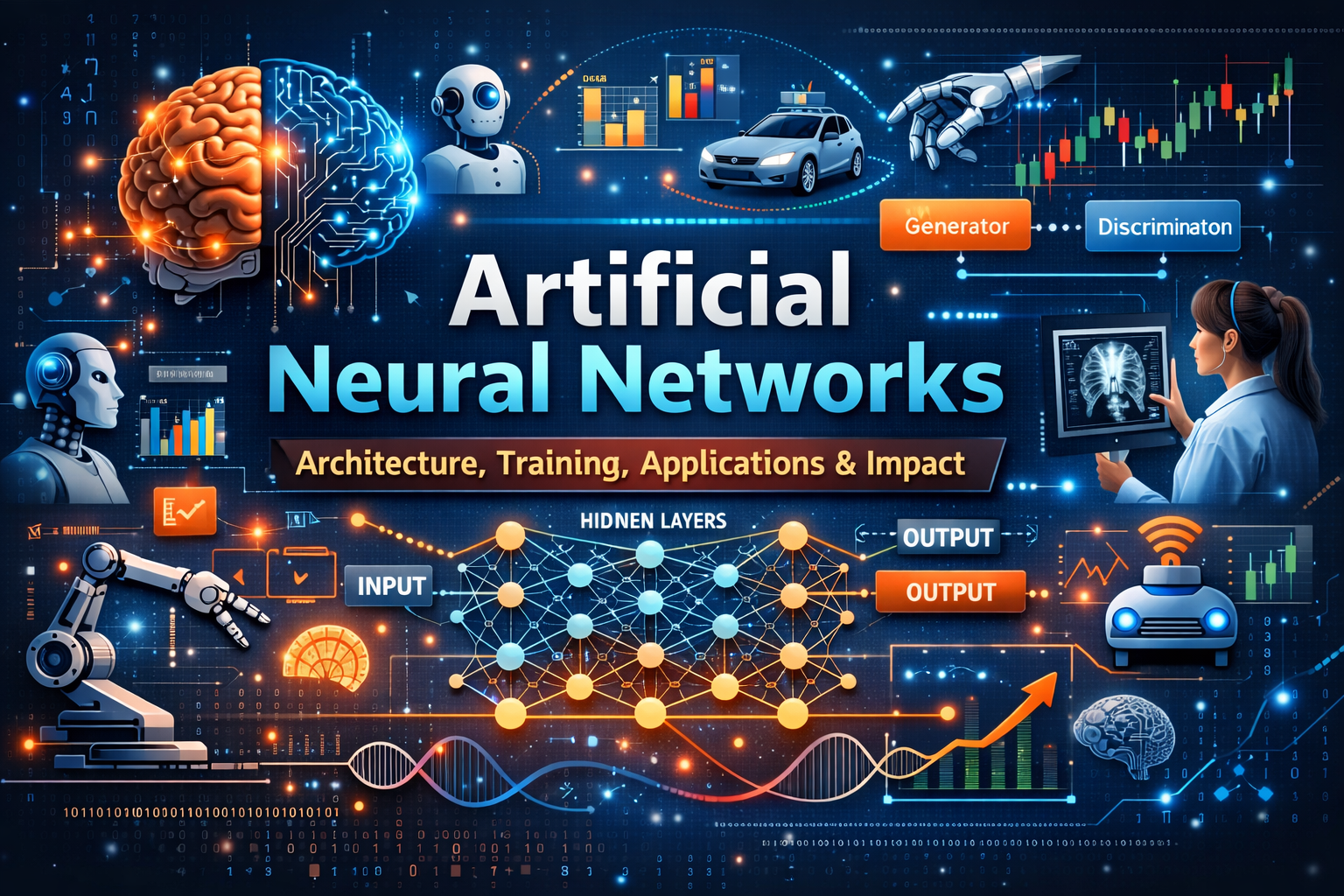

Artificial Neural Networks (ANNs) are the foundation of modern artificial intelligence and deep learning. They are computational models inspired by the human brain that learn patterns from data and improve themselves over time. Today, ANNs power technologies like voice assistants, self-driving cars, face recognition, recommendation systems, chatbots, and even medical diagnosis tools.

In simple terms, an ANN is a system that receives input, processes it in several internal layers, and produces an output based on learned experiences. Instead of being manually programmed for every task, ANNs use data to understand relationships and make predictions or decisions.

What is an Artificial Neural Network?

An Artificial Neural Network (ANN) is a machine learning model designed to simulate the way human brain cells (neurons) process and transmit information. It is built using interconnected nodes—called neurons—that work together to solve problems.

- ANNs identify patterns from data

- They improve accuracy through learning cycles

- They can handle tasks where traditional programs fail

- They are capable of working with noisy or incomplete information

Imagine feeding a system thousands of images of cats and dogs. Over time, the ANN learns features like shape, texture, eyes, ears, and tail positions. Eventually, it can correctly classify whether a new image contains a cat or a dog—even if it has never seen it before. This learning ability makes ANNs extremely powerful in real-world scenarios.

Biological Inspiration

ANNs are inspired by the structure of the biological nervous system. The human brain contains nearly 86 billion neurons interconnected through synapses. These neurons exchange electrical signals to process thoughts, store memory, and make decisions. In the same way, ANNs are built from artificial neurons that receive input, apply computation, and transmit a result to the next neuron.

Comparison: Biological vs Artificial Neurons

| Biological Neuron | Artificial Neuron |
|---|---|
| Receives signals through dendrites | Receives numerical input values |
| Processes information and fires signals | Computes weighted sum + activation |
| Passes output via axon to other neurons | Sends output to next layer via connections |

Why Are Neural Networks Important?

Neural networks are crucial because they solve problems that are difficult or impossible with traditional programming. Traditional algorithms need a clear set of rules. But many problems like speech, vision, and natural language do not follow exact rules—because data is noisy, inconsistent, or unpredictable.

ANNs learn patterns automatically from experience, making them the backbone of the modern AI revolution. They can recognize faces, translate languages, predict stock prices, detect diseases from X-rays, and even generate new images or text.

Architecture of Artificial Neural Networks

The architecture of an Artificial Neural Network defines how information flows inside the system. It includes individual processing units (neurons), how they connect, how input values are transformed, and how the network produces the final result. Understanding the architecture is important because the structure of the network directly influences its learning ability and accuracy.

1. Structure of an Artificial Neuron

An artificial neuron is the smallest building block of a neural network. It takes inputs, multiplies each input with a weight, adds a bias value, applies an activation function, and produces an output.

Output = Activation(W₁X₁ + W₂X₂ + ... + WₙXₙ + Bias)

Here, W = weights, X = inputs, and Bias adjusts the output to improve learning flexibility. The activation function makes the model capable of understanding non-linear patterns.

Key Components of a Neuron

- Inputs (X): Raw data or features provided to the neuron.

- Weights (W): Adjustable parameters that define importance of each input.

- Bias (b): A constant value added to shift activation results.

- Activation Function: Transforms the weighted sum into the neuron's output and determines how strongly the neuron activates.

- Output: Final processed value passed to next layer.
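The neuron formula above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the input values, weights, and bias are hypothetical numbers chosen for the example, and sigmoid is assumed as the activation function.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Output = Activation(W1*X1 + W2*X2 + ... + Wn*Xn + Bias)."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)

# Hypothetical example values (not taken from any real dataset)
x = [0.5, -1.0, 2.0]   # inputs (X)
w = [0.4, 0.3, -0.2]   # weights (W)
b = 0.1                # bias (b)

out = neuron_output(x, w, b)
print(out)  # a value between 0 and 1
```

Changing a weight changes how much the matching input influences the output, which is exactly what training adjusts.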

2. Activation Functions in ANN

Activation functions introduce non-linearity into neural networks. Without them, the network would only learn linear patterns, making it unsuitable for complex tasks like image recognition or language processing.

| Activation Function | Equation / Range | Where Used |
|---|---|---|
| Sigmoid | 1 / (1 + e^(-x)), range 0 to 1 | Binary classification |
| ReLU (Rectified Linear Unit) | max(0, x) | Deep learning models, CNNs |
| Tanh | Range -1 to 1 | Hidden layers; zero-centered output, though it can still saturate for large inputs |
| Softmax | Converts values to probabilities that sum to 1 | Multi-class classification |
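The four activation functions in the table can be written directly from their definitions. The sketch below uses only Python's standard library; the function names are chosen for this example.

```python
import math

def sigmoid(x):
    """Maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    """Passes positive values through and clips negatives to 0."""
    return max(0.0, x)

def tanh(x):
    """Maps any real number into (-1, 1), centered on 0."""
    return math.tanh(x)

def softmax(values):
    """Turns a list of scores into probabilities that sum to 1."""
    largest = max(values)  # subtracting the max avoids overflow in exp
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))
print(relu(-3.0), relu(2.5))
print(tanh(0.0))
print(softmax([1.0, 2.0, 3.0]))
```

Sigmoid, ReLU, and Tanh act on one value at a time, while Softmax needs the whole list of scores because each probability depends on all of them.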

3. Layers in Artificial Neural Networks

Layers are groups of neurons arranged in a specific order. Data flows from the first layer to the last, transforming at each step. The number of layers and neurons depends on the complexity of the problem.

- Input Layer: Receives raw data or features. Example: pixel values of an image or numeric values in a dataset.

- Hidden Layers: Process information using weights & activation functions. More hidden layers = deeper network = higher learning capacity.

- Output Layer: Produces final prediction or classification. Example: Yes/No, Cat/Dog, numerical predictions.

Note:

When a network has more than one hidden layer, it is called a Deep Neural Network (DNN). This is the foundation of modern Deep Learning.

4. Forward Propagation (How Data Moves)

Forward propagation is the process where input data moves through all layers of the network to generate output.

- Input layer receives raw values

- Each neuron computes weighted sum + bias

- Activation function modifies the value

- Output is passed to the next layer

- Final output layer generates prediction

Example: A model predicting if a person will buy a product based on age, income, and online behavior.

The network learns from past purchase data and predicts future outcomes.
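As a rough sketch of forward propagation for the purchase example, the code below pushes three scaled features through one hidden layer and one output neuron. Everything numeric here is an assumption made for illustration: the weights are hand-picked rather than learned, and the layer sizes (3 inputs, 2 hidden neurons, 1 output) are arbitrary.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One layer: every neuron computes weighted sum + bias, then activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(features, params):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(features, params["W1"], params["b1"], relu)
    output = dense(hidden, params["W2"], params["b2"], sigmoid)
    return output[0]

# Hypothetical, hand-picked parameters (a trained network would learn these)
params = {
    "W1": [[0.2, 0.5, -0.3], [-0.4, 0.1, 0.6]],  # 2 hidden neurons x 3 inputs
    "b1": [0.0, 0.1],
    "W2": [[0.7, -0.5]],                         # 1 output neuron x 2 hidden
    "b2": [-0.1],
}

# Hypothetical features scaled to 0..1: age, income, online activity
features = [0.3, 0.8, 0.5]
probability = forward(features, params)
print(probability)  # interpreted as the probability of a purchase
```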

How Do Artificial Neural Networks Learn?

The learning process in an Artificial Neural Network involves continuously adjusting internal parameters (weights and bias) so that the predicted output becomes as close as possible to the actual value. This is done through mathematical operations that compare predictions with reality and reduce error step-by-step.

The three major components of ANN learning are:

- Loss Function – Measures how wrong the model is

- Backpropagation – Method to calculate error contribution of each neuron

- Gradient Descent – Optimization technique to adjust weights

1. Loss Function (Error Calculation)

Loss function represents the difference between predicted output and actual output. The goal of the network is to minimize this loss, meaning the prediction becomes more accurate over time.

| Loss Function | Formula / Purpose | Used In |
|---|---|---|
| Mean Squared Error (MSE) | Average of squared differences | Regression problems (numerical prediction) |
| Binary Cross-Entropy | Measures probability difference | Binary classification (Yes/No) |
| Categorical Cross-Entropy | Compares predicted probability distribution | Multi-class classification |

A high loss means the model is performing poorly. As learning continues, the loss value should gradually decrease. A well-trained model has a low loss, indicating good predictive performance.
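The three loss functions from the table can be sketched as follows. The sample values are hypothetical, and the small `eps` clamp is an assumption added here to avoid taking the logarithm of zero.

```python
import math

def mse(y_true, y_pred):
    """Mean Squared Error: average of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average loss for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from exactly 0 or 1
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Loss for one sample: one-hot label vs. predicted distribution."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))

# Hypothetical examples
regression_loss = mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
confident_loss = binary_cross_entropy([1, 0], [0.9, 0.1])
unsure_loss = binary_cross_entropy([1, 0], [0.6, 0.4])
multi_class_loss = categorical_cross_entropy([0, 1, 0], [0.1, 0.8, 0.1])

print(regression_loss, confident_loss, unsure_loss, multi_class_loss)
```

The confident, correct predictions give a lower cross-entropy than the unsure ones, which matches the idea that a lower loss means better predictions.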

2. Backpropagation (Error Distribution)

Backpropagation is the heart of neural network learning. After a prediction is made, the model calculates the loss. Then, it works backwards from the output to the input layer to determine how much each neuron contributed to the error.

- Calculate output and loss (error)

- Trace error backward layer-by-layer

- Identify which weights caused higher error

- Adjust weights to reduce error

- Repeat the cycle for every training example

Backpropagation is like finding where mistakes happened and correcting them. The more data we train on, the better the model becomes.
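To make the backward pass concrete, here is a small sketch for a deliberately tiny network: one input, one sigmoid hidden neuron, and one linear output, with squared error as the loss. The network shape and parameter values are assumptions for illustration. The gradients from the chain rule are compared against a numerical estimate as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, p):
    h = sigmoid(p["w1"] * x + p["b1"])   # hidden neuron
    y_hat = p["w2"] * h + p["b2"]        # linear output neuron
    return h, y_hat

def loss(x, y, p):
    _, y_hat = forward(x, p)
    return (y_hat - y) ** 2

def backprop(x, y, p):
    """Apply the chain rule from the output back to the input."""
    h, y_hat = forward(x, p)
    d_y_hat = 2.0 * (y_hat - y)          # dLoss/dy_hat
    grads = {"w2": d_y_hat * h, "b2": d_y_hat}
    d_h = d_y_hat * p["w2"]              # error passed back to hidden neuron
    d_z = d_h * h * (1.0 - h)            # through the sigmoid derivative
    grads["w1"] = d_z * x
    grads["b1"] = d_z
    return grads

def numerical_grad(x, y, p, name, eps=1e-6):
    """Central-difference estimate, used only to check backprop."""
    plus, minus = dict(p), dict(p)
    plus[name] += eps
    minus[name] -= eps
    return (loss(x, y, plus) - loss(x, y, minus)) / (2.0 * eps)

# Hypothetical parameters and one training example
params = {"w1": 0.5, "b1": -0.2, "w2": 1.5, "b2": 0.1}
x, y = 2.0, 1.0

grads = backprop(x, y, params)
for name in params:
    print(name, grads[name], numerical_grad(x, y, params, name))
```

Each gradient says how much the loss would change if that parameter were nudged, which is the "error contribution" that the optimizer then uses.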

3. Gradient Descent (Optimization)

Gradient Descent is a mathematical optimization strategy used to update the model's weights. It moves the weight values in the direction that reduces loss the most.

Imagine being blindfolded on a mountain and trying to reach the lowest point (minimum error). Your best approach is to take small steps downhill based on the slope you feel. This is exactly how Gradient Descent works.

- Step uphill → Higher loss (bad)

- Step downhill → Lower loss (good)

- Keep stepping → Reach minimum loss

Common variants of Gradient Descent:

- Batch Gradient Descent – Updates after full dataset

- Stochastic Gradient Descent (SGD) – Updates after each record

- Mini-Batch Gradient Descent – Updates in small batches (most common)
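The three variants differ only in how many records are used per weight update. The sketch below fits a single weight `w` in the model `y = w * x` on a tiny hypothetical dataset; the data, learning rate, and epoch count are assumptions, and shuffling is omitted to keep the example deterministic.

```python
def gradient_descent(xs, ys, batch_size, lr=0.05, epochs=50):
    """Fit y = w * x by minimizing mean squared error.

    batch_size == len(xs) -> batch gradient descent
    batch_size == 1       -> stochastic gradient descent (SGD)
    anything in between   -> mini-batch gradient descent
    """
    w = 0.0
    for _ in range(epochs):
        for start in range(0, len(xs), batch_size):
            batch_x = xs[start:start + batch_size]
            batch_y = ys[start:start + batch_size]
            # Gradient of the mean squared error with respect to w
            grad = sum(2.0 * (w * x - y) * x
                       for x, y in zip(batch_x, batch_y)) / len(batch_x)
            w -= lr * grad  # step downhill
    return w

# Hypothetical data generated from y = 2 * x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w_batch = gradient_descent(xs, ys, batch_size=len(xs))
w_sgd = gradient_descent(xs, ys, batch_size=1)
w_mini = gradient_descent(xs, ys, batch_size=2)
print(w_batch, w_sgd, w_mini)  # all three approach 2.0
```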

4. Learning Rate

Learning rate is a hyperparameter that controls how much the weights change after each update. It decides the size of the step taken while descending towards minimum loss.

- Too High → Model jumps around, fails to find minimum

- Too Low → Model learns very slowly, takes too long to converge

- Just Right → Smooth and efficient learning

Selecting a correct learning rate is one of the most important steps in training neural networks.
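The effect of the learning rate is easy to see on a toy problem. The sketch below minimizes the hypothetical loss `w * w` (minimum at 0) with three different learning rates; the specific rates and step count are chosen only to illustrate the three cases above.

```python
def descend(lr, steps=20, w=1.0):
    """Run gradient descent on loss(w) = w * w, whose gradient is 2 * w."""
    for _ in range(steps):
        w -= lr * 2.0 * w
    return w

too_high = descend(lr=1.1)     # overshoots and moves away from the minimum
too_low = descend(lr=0.001)    # barely moves in 20 steps
just_right = descend(lr=0.3)   # lands very close to the minimum at 0

print(too_high, too_low, just_right)
```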

5. Step-by-Step Example of Learning

Suppose an ANN predicts the house price based on area and location. The real price is ₹80 lakh, but the model predicts ₹60 lakh. The loss is calculated as the difference. Now the model adjusts weights and aims to predict 65, 70, 75, 78... until it reaches close to 80 lakh.

This gradual improvement is achieved by:

- Calculating loss

- Finding errors via backpropagation

- Updating weights using gradient descent

- Repeating the process until model is accurate
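The house price example can be simulated with a single weight. This is a simplified sketch under stated assumptions: the model is `prediction = w * area`, the area is a hypothetical scaled value of 1.0, and the starting weight is chosen so the first prediction is 60 lakh. The intermediate predictions will differ from the rounded numbers quoted above, but they follow the same pattern of moving steadily toward 80.

```python
target = 80.0        # actual price in lakh
area = 1.0           # hypothetical scaled feature value
w = 60.0             # initial weight, so the first prediction is 60
learning_rate = 0.1

predictions = []
for step in range(30):
    prediction = w * area                      # forward pass
    predictions.append(prediction)
    # Squared error loss: (prediction - target) ** 2
    gradient = 2.0 * (prediction - target) * area
    w -= learning_rate * gradient              # gradient descent update

print([round(p, 2) for p in predictions[:5]])
print(round(predictions[-1], 2))
```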

Types of Artificial Neural Networks

Artificial Neural Networks can be categorized into different types based on how data flows inside the network, how they remember information, and what kind of tasks they are designed to solve. Choosing the correct network type is important for achieving high accuracy and performance.

Below are the most commonly used neural network architectures in modern artificial intelligence:

1. Feedforward Neural Network (FFNN)

Feedforward Neural Networks are the simplest form of ANN, where data moves in only one direction: from the input layer to the output layer. There are no feedback loops in the data flow and no memory of previous inputs.

Example: Predicting pass/fail from student marks

Input Layer → Hidden Layer → Output Layer

2. Convolutional Neural Network (CNN)

CNNs are designed to work with image and visual data. They automatically detect and extract important features like edges, patterns, shapes, and textures from images using convolution filters. CNNs are the backbone of modern computer vision technology.

- Recognizes patterns in images

- Works with pixels, spatial information

- Often combined with pooling layers for dimension reduction

Applications:

- Face Recognition (phones, security)

- Automatic Vehicle Number Plate Recognition

- Medical disease detection (X-ray, MRI scans)

Image → Convolution → ReLU → Pooling → Fully Connected Layer → Output
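The first three stages of this pipeline (Convolution → ReLU → Pooling) can be sketched in plain Python. The 6x6 image and the vertical-edge filter below are hypothetical; in a real CNN the filter values are learned during training. As in most deep learning libraries, the "convolution" here slides the filter without flipping it.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu_map(feature_map):
    """Apply ReLU to every value in the feature map."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical 6x6 image: dark on the left, bright on the right
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-written filter that responds to dark-to-bright vertical edges
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = relu_map(conv2d(image, vertical_edge))
pooled = max_pool(feature_map)
print(feature_map[0])  # [0, 3, 3, 0]
print(pooled)          # [[3, 3], [3, 3]]
```

The feature map is largest where the edge sits, and pooling shrinks the 4x4 map to 2x2 while keeping that strong response.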

3. Recurrent Neural Network (RNN)

Unlike FFNNs, RNNs keep a hidden state that carries information from previous steps and feeds it into the next step. They work well with sequence-based data where order matters. These networks contain loops that maintain this memory.

Examples: Stock market trends, text completion, speech processing

Input (t1) → Output (t1)

↓

Input (t2) → Output (t2)

↓

Input (t3) → Output (t3)

(Memory flows forward)
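A minimal sketch of this loop, assuming a single recurrent neuron with a tanh activation and hand-picked (not learned) weights: the hidden state `h` is the memory that flows from one time step to the next.

```python
import math

def rnn_forward(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Process a sequence one step at a time, carrying a hidden state."""
    h = 0.0                      # memory starts empty
    states = []
    for x_t in sequence:
        # New state depends on the current input AND the previous state
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

# Same values, different order (hypothetical inputs)
states_a = rnn_forward([1.0, 0.0, 0.0])
states_b = rnn_forward([0.0, 0.0, 1.0])

print(states_a)  # the first input still echoes in later states
print(states_b)  # the state stays 0 until the final input arrives
```

In the first sequence, the state stays above zero even when the later inputs are zero, which is the memory effect. It also fades at each step, which hints at why plain RNNs struggle with long sequences.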

4. Long Short-Term Memory (LSTM)

LSTM is an advanced form of RNN designed to handle long-term dependencies and mitigate the vanishing gradient problem. It contains gates (input, output, and forget gates) that manage memory efficiently and decide what information to keep or discard.

- Remembers long-term patterns

- Handles complex sequences

- Reduces the loss of earlier information during training

Examples in real-world:

- Google Translate

- Predictive text keyboard

- Music generation from patterns

5. Autoencoders

Autoencoders are neural networks used to compress data (encoding) and expand it back to original form (decoding). They are widely used to remove noise from data, reduce dimensionality, and improve data quality for machine learning models.

Applications:

- Image de-noising (removing grains from photos)

- Data compression for storage

- Anomaly detection (fraud, defects)

Data → Encoder → Compressed Form → Decoder → Output (Restored Input)

6. Generative Adversarial Networks (GANs)

GANs are powerful networks that generate new data that looks similar to real data. They contain two networks: Generator and Discriminator, competing against each other like a game.

Generator: Creates fake data that resembles real data

Discriminator: Detects if data is real or fake

Both improve each other during training

Applications:

- AI face generation (synthetic faces of people who do not exist)

- Video game character design

- Deepfake creation (legitimate only when used within ethical limits)

- Artwork and image enhancement

Generator → Creates Fake Data → Discriminator → True or False

(Feedback loop: during training, the Discriminator's result is fed back to the Generator)

Comparison of ANN Types

| Network Type | Memory | Data Type | Best Use Case |
|---|---|---|---|
| FFNN | No | Numeric | Simple predictions |
| CNN | No | Images, Pixels | Computer Vision |
| RNN | Yes | Sequential | Text, Time-series |
| LSTM | Long Memory | Sequential | Long sequences (translation, speech) |
| Autoencoder | N/A | Image/Data | Compression & Denoising |
| GAN | No | Image/Data | Content Generation |

Deep Learning and Artificial Neural Networks

Deep Learning is a subfield of Machine Learning that focuses on training multi-layer neural networks to learn patterns from large datasets. Artificial Neural Networks form the basic foundation of deep learning models, evolving into complex architectures like CNNs, RNNs, and Transformer Networks. These networks can learn hierarchical representations and deliver highly accurate predictions in real-world applications.

Relationship Between Deep Learning & ANNs

Deep Learning extends Artificial Neural Networks by adding:

- Many hidden layers (deep architecture)

- Massive training datasets

- Powerful hardware (GPUs/TPUs)

- Advanced optimization algorithms

- Automated feature extraction

Unlike traditional Machine Learning, which requires manual feature engineering, deep learning models automatically learn features, reducing human effort and improving performance on complex problems.

Challenges in Training ANNs

Training neural networks is powerful but comes with challenges:

- Overfitting: Model memorizes training data and performs poorly on new data.

- Underfitting: Model lacks complexity to understand the patterns.

- Vanishing/Exploding Gradients: Gradients shrink or grow uncontrollably in deep networks.

- High Computation Cost: Requires GPUs and parallel processing.

- Data Dependency: Requires large, labeled datasets for accurate learning.

Solutions & Optimization Techniques

- Use Dropout to reduce overfitting

- Apply Batch Normalization to stabilize training

- Choose modern activation functions like ReLU, Leaky ReLU, GELU

- Use Early Stopping to avoid training too long

- Apply Data Augmentation to increase dataset size

- Use Adam / RMSprop optimizers instead of classical gradient descent
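Of the techniques above, Early Stopping is the simplest to sketch without any library. The function below watches a list of validation losses and stops once the loss has failed to improve for `patience` epochs in a row. The function name, the `patience` value, and the loss values are all assumptions made for this example.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (epoch where training stops, best validation loss seen)."""
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, current in enumerate(val_losses):
        if current < best_loss:
            best_loss = current
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_loss   # stop: the model stopped improving
    return len(val_losses) - 1, best_loss  # never triggered

# Hypothetical validation losses: improving, then getting worse (overfitting)
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch, best_loss = early_stopping_epoch(val_losses)
print(stop_epoch, best_loss)  # 5 0.5
```

In practice the weights from the best epoch (epoch 3 here) are usually the ones kept.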

Transfer Learning in ANN

Transfer Learning is a technique where a model trained on a large dataset is reused for a different, smaller dataset. Instead of training from scratch, we use an already trained model and fine-tune it.

Advantages:

- Faster training time

- Higher accuracy with less data

- Useful in medical, research, and industrial tasks

Example: Using a pre-trained CNN like VGG16 or ResNet for medical image classification with limited X-ray images.

Hyperparameter Tuning in ANNs

Hyperparameters are settings that control the learning behavior of the network. Tuning these parameters is essential to achieve the best model accuracy.

| Hyperparameter | Meaning | Effect on Training |
|---|---|---|
| Learning Rate | Step size in gradient descent | Too high → unstable, Too low → slow |
| Batch Size | Samples per training step | Small → noisier updates, less memory; Large → smoother gradient estimates, more memory per step |
| Epochs | Number of full training cycles | More epochs can improve accuracy, but too many risk overfitting |
| Activation Function | Introduces non-linearity | Determines learning capability |
| Optimizer | Optimization algorithm | Controls speed and stability |

How ANN Training Works Step-by-Step

- Data is collected and preprocessed (normalization, scaling, cleaning)

- Weights are initialized (random small values)

- Forward pass is executed to generate output

- Loss is calculated using a loss function

- Backpropagation computes gradients, and the optimizer updates weights to reduce loss

- Repeat steps for several epochs

- Validate model on unseen validation data

- Deploy model for real-world predictions
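The training steps above can be sketched end to end with a single sigmoid neuron that learns the logical OR function. This is a toy illustration under stated assumptions: the dataset, learning rate, epoch count, and seed are hypothetical, there is no separate validation set, and real projects would normally use a library such as PyTorch or TensorFlow.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w, b):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def train(data, lr=0.5, epochs=2000, seed=0):
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(2)]  # small random weights
    b = 0.0
    history = []
    for _ in range(epochs):
        grad_w, grad_b, total_loss = [0.0, 0.0], 0.0, 0.0
        for x, y in data:
            p = predict(x, w, b)                     # forward pass
            total_loss += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            error = p - y   # gradient of cross-entropy w.r.t. the weighted sum
            grad_w[0] += error * x[0]
            grad_w[1] += error * x[1]
            grad_b += error
        n = len(data)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]  # weight update
        b -= lr * grad_b / n
        history.append(total_loss / n)               # average loss per epoch
    return w, b, history

# Logical OR: output is 1 if either input is 1
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

w, b, history = train(data)
predicted_labels = [round(predict(x, w, b)) for x, _ in data]
print(predicted_labels)          # [0, 1, 1, 1]
print(history[0], history[-1])   # loss falls over the epochs
```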

Modern Trends & Future of ANNs

- Explainable AI (XAI): Improving transparency in ANN decisions

- Transformer Networks: Increasingly replace RNNs for text & sequence problems

- Generative Models: GANs and diffusion models for realistic image/video generation

- Edge AI: Running neural networks on mobile/IoT devices

- Neural Architecture Search (NAS): AI that designs neural networks automatically

With ongoing advancements, Artificial Neural Networks are becoming more efficient, explainable, and widely accessible, powering industries like healthcare, security, autonomous driving, climate science, and personalized recommendation systems.

Types of Neuron Connections and Neural Network Architectures

Artificial Neural Networks can be categorized based on how neurons are connected, how information flows, and how layers interact. The architecture and connection pattern determines the network's capability, memory, complexity, and real-world application suitability.

Types of Neuron Connections

Neural networks can connect neurons in the following ways:

| Connection Type | Description | Applications |
|---|---|---|
| Feedforward Connections | Signals move in one direction: input → hidden → output, no loops | Classification, regression, pattern recognition |
| Feedback Connections | Outputs are fed back to previous layers to improve predictions | Stability control, learning refinement |
| Recurrent Connections | Neurons loop information to themselves or previous layers (memory) | Time-series, text, speech, sequential learning tasks |
| Lateral Connections | Neurons in the same layer are interconnected | Adaptive filtering, competitive learning, self-organizing maps |

These connection styles form the foundation of different network architectures used in deep learning systems.

Major ANN Architectures

1. Feedforward Neural Network (FNN)

The simplest form where data flows only forward. It has input, hidden, and output layers without loops.

- Used for simple predictions

- No memory of previous inputs

- Fast and easy to train

2. Convolutional Neural Network (CNN)

Designed to process grid-like data such as images. CNNs automatically extract features like edges, shapes, and objects using convolutional filters, making them ideal for visual tasks.

Key Components:

- Convolution Layer (feature extraction)

- Pooling Layer (dimensionality reduction)

- Fully Connected Layer (final prediction)

Applications: Image recognition, CCTV surveillance, medical imaging, autonomous vehicles.

3. Recurrent Neural Network (RNN)

RNNs have loops that allow data to persist, enabling memory of previous states for sequence prediction. They are useful for problems where context and order matter.

Challenges: Vanishing gradient, difficulty remembering long-term dependencies.

Applications: Chatbots, text generation, sentiment analysis, weather forecasting.

4. Long Short-Term Memory (LSTM)

LSTM is a special type of RNN that mitigates the vanishing gradient problem by using gates to manage memory.

Why better than RNN? Retains information for longer time frames.

Applications: Language translation, speech recognition, stock market predictions.

5. GRU (Gated Recurrent Unit)

Similar to LSTM but with simplified gates, making it computationally faster and easier to train.

Good choice for: Real-time applications and low-power hardware (Mobiles, IoT).

6. Generative Adversarial Network (GAN)

GANs consist of two networks: a Generator (creates fake data) and a Discriminator (detects fake data). They compete in a training scenario, eventually creating realistic data.

Applications: Image generation, deepfake creation, art synthesis, video frame enhancement.

7. Transformer Networks

An architecture that replaces recurrence with a self-attention mechanism. Transformers analyze relationships between all elements of the input simultaneously, making them well suited to large-scale language models.

Advantages: Faster training, parallel processing, handles long-range dependencies.

Applications: ChatGPT, BERT, Google Translate, content generation, speech models.
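The core of self-attention can be sketched in plain Python as scaled dot-product attention. This is a simplified illustration: the token vectors are hypothetical, and the same vectors are used as queries, keys, and values, whereas real Transformers first pass the tokens through learned projection matrices and use multiple attention heads.

```python
import math

def softmax(values):
    largest = max(values)
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Each token looks at every token and takes a weighted mix of values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token to every token, scaled by sqrt(d_k)
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)   # attention weights sum to 1
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for three tokens
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Every row of weights is computed independently of the others, which is why attention can be processed in parallel instead of step by step like an RNN.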

8. Autoencoders

Autoencoders learn to compress data into a smaller representation and then reconstruct it back. They are used for anomaly detection, noise removal, and dimensionality reduction.

Applications: Cybersecurity threat detection, medical anomaly detection, feature extraction.

9. Self-Organizing Maps (SOM)

SOMs are unsupervised networks that group similar data together and visualize high-dimensional information on a 2D map.

Applications: Customer segmentation, recommendation systems, data clustering.

Summary of Architectures and Best Use Cases

| Architecture | Best For | Reason |
|---|---|---|
| CNN | Images, videos | Spatial feature extraction |
| RNN / LSTM | Text, speech, time-series | Memory for sequences |
| GAN | Image/Audio generation | Generative learning |
| Transformers | Large-scale NLP | Parallel attention-based learning |
| Autoencoder | Compression, anomaly detection | Latent representation learning |

With the right architecture selection, Artificial Neural Networks can solve problems ranging from recognizing objects in images to generating human-like conversations and accelerating scientific research.

Real-World Applications of Artificial Neural Networks

Artificial Neural Networks have become a foundational technology in modern artificial intelligence. Their ability to learn patterns, recognize objects, understand text, and make predictions allows them to power solutions across almost every industry. From healthcare and automation to banking and entertainment, ANN-driven systems are transforming how decisions are made and how users interact with technology.

Applications of ANNs by Industry

1. Healthcare & Medical Science

ANNs enable machines to learn from medical data and assist doctors in diagnosis and patient care.

- Medical image analysis using CNNs (X-rays, CT scans, MRI)

- Early prediction of diseases (cancer, diabetes, neurological disorders)

- Drug discovery and molecular behavior prediction

- Personalized treatment recommendations

- Robotic surgery assistance systems

Impact: Faster diagnosis, reduced error rate, life-saving predictive healthcare solutions.

2. Finance & Banking

Financial institutions leverage ANNs to detect fraud, analyze trends, and make data-driven decisions.

- Credit scoring and loan approval automation

- Fraudulent transaction detection (using anomaly detection models)

- Stock market price prediction using LSTM networks

- ATM surveillance and identity verification systems

Impact: Reduced financial risk, safer transactions, improved investment decisions.

3. Transportation & Autonomous Vehicles

Self-driving cars heavily rely on ANNs for environment perception and navigation.

- Traffic pattern recognition and route optimization

- Driver alertness monitoring using facial recognition

- Pedestrian detection and collision avoidance with CNNs

- Autonomous drones for delivery and disaster assistance

Impact: Improved road safety, reduced travel time, progress toward self-driving transportation.

4. E-Commerce & Retail

ANNs help businesses understand customer behavior and personalize the shopping journey.

- Product recommendation systems (like Amazon & Flipkart)

- Dynamic pricing optimization based on buying trends

- Voice search and chatbot-based support

- Demand forecasting for inventory planning

Impact: Better customer engagement, increased sales conversions, improved inventory management.

5. Cybersecurity

ANNs identify suspicious network patterns to protect systems from attacks.

- Intrusion detection systems using anomaly detection methods

- Malware and ransomware identification using classification models

- Biometric authentication (face, fingerprint, iris recognition)

- Defense against phishing and social engineering attacks

Impact: Safer digital ecosystem, reduced fraud, more secure cloud infrastructure.

6. Agriculture & Smart Farming

ANNs power modern agricultural systems to reduce waste and increase productivity.

- Crop disease detection using image classification

- Smart irrigation prediction systems using time-series data

- Yield forecasting based on soil and weather patterns

- Automated weed detection using deep learning

Impact: Lower resource usage, higher productivity, climate-adaptive farming.

7. Entertainment & Media

ANNs enhance the visual and audio experience of content creation.

- Music and art generation using GANs

- Video upscaling and noise reduction for OTT platforms

- Intelligent behavior for non-player characters (NPCs) in games

- Speech-to-text and real-time dubbing systems

Impact: Creative innovation, adaptive gaming, and immersive digital media.

8. Education & Ed-Tech

- Automated grading and exam analysis

- AI tutors that adapt to student learning patterns

- Personalized learning dashboards

- Career prediction models

Impact: Self-paced learning, reduced teacher workload, improved student outcomes.

9. Defense & Military

- Target recognition in high-risk zones

- Autonomous surveillance drones

- Predictive maintenance for military equipment

- Border security monitoring with anomaly detection

Impact: Strategic advantage, faster decision-making, enhanced surveillance.

Overall Impact of ANN in Modern Technology

Artificial Neural Networks are revolutionizing how data is interpreted. They not only automate complicated tasks but also provide insights that humans might miss. With increasing computing power and access to large datasets, ANN-based systems are becoming more accurate and reliable every year.

- Reduction in human errors

- Faster decision-making

- Better prediction and forecasting

- Cost reduction in industries

- Scalability and automation

Overall, ANNs are shaping a future where machines learn from experience, assist humans, and improve life across the globe. With ongoing research, Artificial Neural Networks may soon become as vital to everyday life as electricity and the internet.